Multi-Threading on JuliaHub

What is Multi-threading?

Multi-threading is a programming and execution model that allows multiple threads to run concurrently within a single process. Threads are sequences of instructions that can be executed independently by a CPU core. Multi-threading enables a program to perform multiple tasks simultaneously, improving overall performance and responsiveness.

In a single-threaded program, the CPU executes instructions sequentially, one after the other. In a multi-threaded program, however, different threads can execute different parts of the code at the same time, which can improve performance. This improvement can come with challenges, including data synchronization, increased complexity, and overhead. Julia provides mechanisms that offer an easy-to-use, composable, and efficient multi-threading model.

Writing Multi-threaded Julia Programs

Julia’s threading interface offers general task parallelism inspired by the likes of Go and other powerful parallel programming systems. Similar to Go, Julia provides built-in types for a Task and a Channel. Julia differs from Go in its higher-level facilities. For instance, macros such as Threads.@spawn and Threads.@threads automate creating tasks, scheduling them for execution, and assigning them to threads available in the pool. This expressiveness, combined with Julia’s rich type system, differentiates it in the area of parallel computing, and its flexibility is a contributing factor to it being well received in the scientific community, where modern hardware and parallel computing are crucial for many projects.
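As a rough illustration of the Task and Channel types mentioned above, the sketch below has one spawned task produce values while the main task consumes them. The name `squares` and the buffer size are illustrative choices, not part of the original example.

```julia
# Hypothetical example: `squares` and its buffer size are illustrative choices.
squares = Channel{Int}(5)          # buffered channel holding up to 5 Ints

# Producer task: may be scheduled on any available thread.
producer = Threads.@spawn begin
    for i in 1:5
        put!(squares, i^2)
    end
    close(squares)                 # signal that no more values are coming
end

# Consumer: collecting from a channel takes values until it is closed and drained.
results = collect(squares)
wait(producer)

println(results)                   # [1, 4, 9, 16, 25]
```

Because there is a single producer, the values arrive in the order they were put into the channel.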

Take a look at the example below to get started.

    function say(word)
        for i in eachindex(1:5)
            sleep(1)
            println(
                word,
                "\tthread: $(Threads.threadid())"
            )
        end
    end

- Line 1: function named say which takes 1 input argument word
- Line 2: iterate 5 times
- Line 3: one second delay
- Line 5: display the given word
- Line 6: display the ID of the execution thread

say (generic function with 1 method)

Calling this function twice in sequence takes 10 seconds.

    @elapsed begin
        say("hello")
        say("world")
    end

    hello thread: 1
    hello thread: 1
    hello thread: 1
    hello thread: 1
    hello thread: 1
    world thread: 1
    world thread: 1
    world thread: 1
    world thread: 1
    world thread: 1
    10.013437892
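The threaded examples that follow only spread work across threads if the Julia session was started with more than one. A minimal check, assuming Julia was launched with the `--threads` option or the `JULIA_NUM_THREADS` environment variable, might look like this:

```julia
# Start Julia with more than one thread, for example:
#   julia --threads 4        (or set the JULIA_NUM_THREADS environment variable)

n = Threads.nthreads()   # number of threads available to this session
println("Julia is running with $n thread(s)")

if n == 1
    println("Only one thread: spawned tasks will share it with the main task.")
end
```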


The first say call can be executed on a separate thread with the @spawn macro.

    @elapsed begin
        Threads.@spawn say("hello")
        say("world")
    end

    world thread: 1
    hello thread: 2
    world thread: 1
    hello thread: 2
    world thread: 1
    hello thread: 2
    world thread: 1
    hello thread: 2
    world thread: 1
    hello thread: 2
    5.006972013

What just happened? By prefixing the function call with Threads.@spawn, the macro created a new task and scheduled it to execute on another available thread. Once this task was scheduled, the second say call was started on the main execution thread. The result is that both function calls were able to progress at the same time in a multi-threaded fashion.
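Threads.@spawn returns a Task right away, and nothing in the timed block above explicitly waits for that task to finish. When the result of the spawned work is needed, `fetch` blocks until the task completes and returns its value. The sketch below uses `slow_square` and `run_both`, hypothetical stand-ins for any long-running function and its caller.

```julia
# `slow_square` is a hypothetical stand-in for any long-running function.
function slow_square(x)
    sleep(1)
    return x^2
end

# `run_both` is also hypothetical: it overlaps two calls and combines the results.
function run_both()
    task = Threads.@spawn slow_square(3)   # scheduled on an available thread
    local_result = slow_square(4)          # runs on the current task meanwhile
    return fetch(task) + local_result      # fetch waits for the task's result
end

total = run_both()
println(total)   # 9 + 16 = 25
```

If only completion matters and no value is returned, `wait(task)` can be used in place of `fetch(task)`.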

Another option to achieve similar results is the Threads.@threads macro.

    @elapsed Threads.@threads for word in ["hello", "world"]
        Threads.@threads for i in eachindex(1:5)
            sleep(1)
            println(word, "\tthread: $(Threads.threadid())")
        end
    end

- Line 1: for each word in the list of words, create a task and schedule it on an available thread
- Line 2: for each index in the range, create a task and schedule it on an available thread
- Line 3: delay for one second
- Line 4: display the given word and the ID of the execution thread

    world thread: 1
    world thread: 3
    hello thread: 5
    world thread: 6
    hello thread: 2
    hello thread: 3
    hello thread: 4
    hello thread: 2
    world thread: 4
    world thread: 5
    1.114417783

This result was dramatically faster because not only was each word handled on a separate thread, but each iteration for each word could also execute on an available thread. Execution time dropped to about the time it takes to run a single iteration, and all of the available threads were utilized.
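The loops above only print, so their iterations never touch shared data. When iterations do update a shared value, the data synchronization concern mentioned at the start applies. One possible approach, sketched here with a hypothetical `count_evens` function, is an atomic counter from the Threads module:

```julia
# Hypothetical example: count even numbers in a range using several threads.
function count_evens(n)
    counter = Threads.Atomic{Int}(0)          # shared counter, safe to update concurrently
    Threads.@threads for i in 1:n
        if iseven(i)
            Threads.atomic_add!(counter, 1)   # atomic read-modify-write
        end
    end
    return counter[]                          # read the final value
end

println(count_evens(1_000))   # 500
```

A plain `Int` incremented from several threads could lose updates. The atomic type trades a little overhead for a correct count, and for larger shared structures a lock may be the more natural choice.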

Multi-threading on JuliaHub

JuliaHub is a cloud computing platform tailor-made for parallel Julia programs. Follow along to scale up this “Hello, World” example.

First, log in to JuliaHub and navigate to the Multithreading Guide Project. Once there, Launch a Julia IDE and Connect to it.

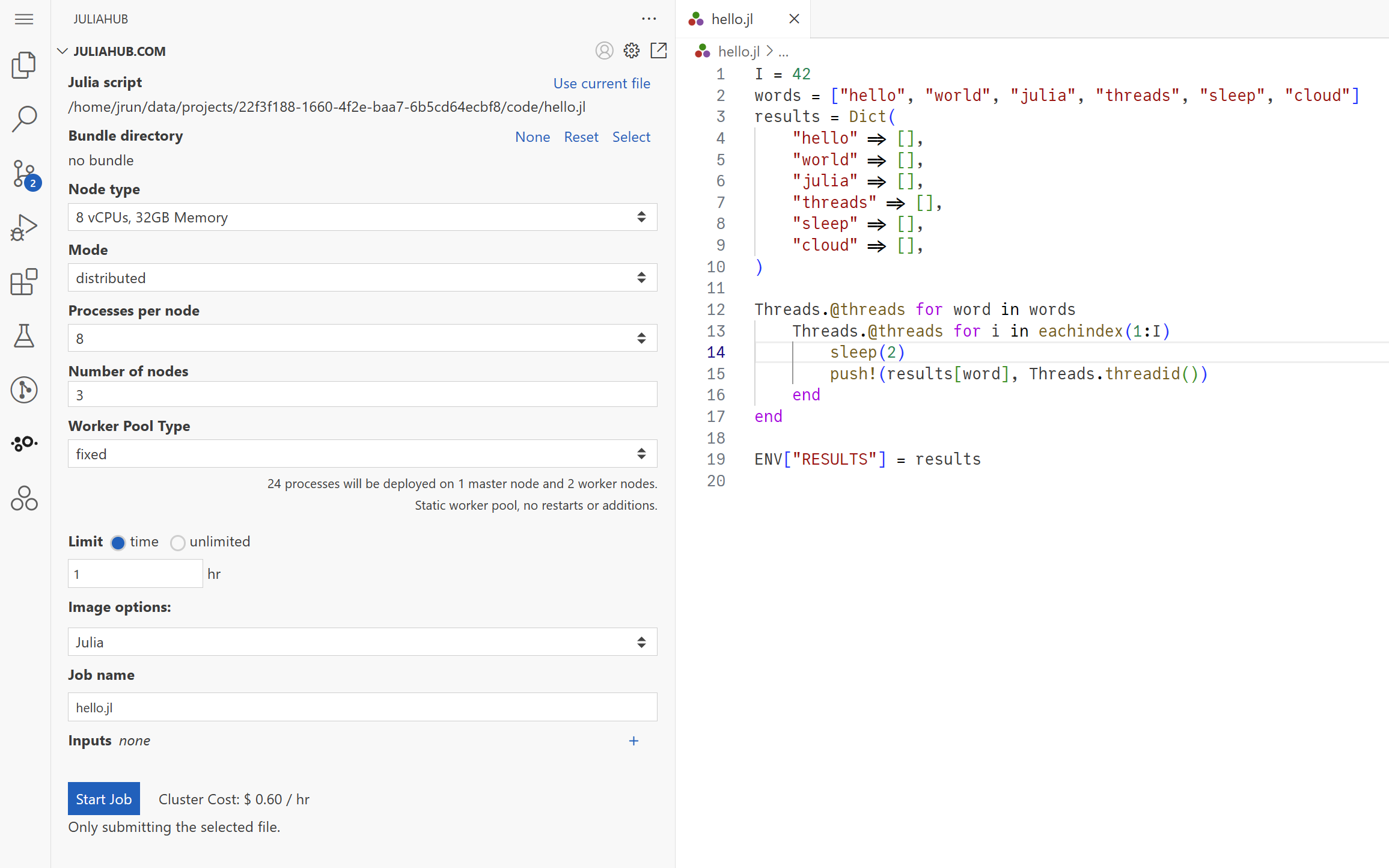

An example similar to the one here is in the hello.jl file. Open it, copy the following configuration into the JuliaHub extension, and click “Start Job!”

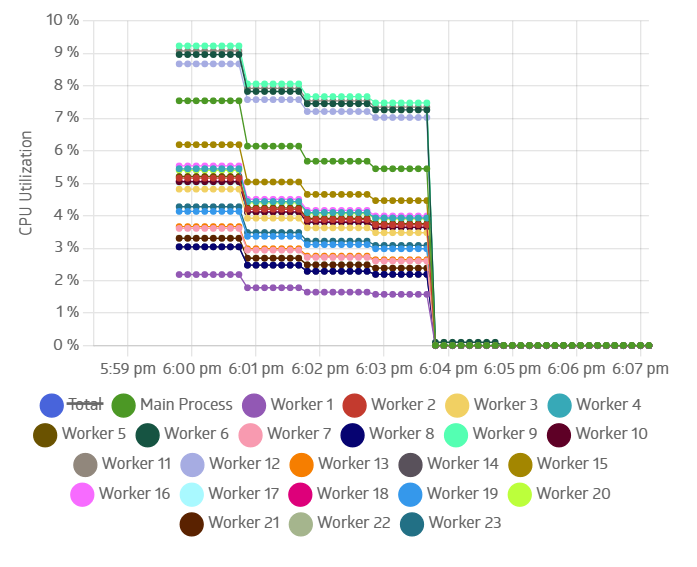

Once complete, you can view the job details and see the resource metrics. Indeed, all the available workers were utilized to complete the job!

This is just the beginning:

- See the Parallel Computing Solution Brief for a deeper dive into parallel computing in Julia

- Stay tuned for upcoming webinars

- And scale up your programs with JuliaHub

To try multi-threading yourself, log in to JuliaHub.com for free and launch the Julia IDE found under the Applications section on the left-side menu.

About the Author

Jacob Vaverka
