Machine Learning (Keno Fischer Quora Session)

Keno Fischer, Julia Computing Co-Founder and Chief Technology Officer (Tools), participated in a Quora Session March 18-23.

What will machine learning look like 15-20 years from now?

Machine learning is a very rapidly moving field, so it's hard to make predictions about the state of the art 6 months from now, let alone 15-20 years. I can, however, offer a series of educated guesses based on what I see happening right now.

-

We are still far away from AGI. Current-generation machine learning systems are still very far away from something that could legitimately be called artificial "intelligence". The systems we have right now are phenomenal at pattern recognition from lots of data (even reinforcement learning systems are mostly about memorizing and recognizing patterns that worked well during training). This is certainly a necessary step, but it is a long way from an intelligent system. In terms of human cognition, what we have now is analogous to the subconscious processes that allow split-second activation of your sympathetic nervous system when your peripheral vision detects a predator approaching or a former significant other turning a corner - in other words, pattern-based, semi-automatic decisions that our brain makes "in hardware". I don't currently see anything that would resemble intentional thought, and I'm not convinced we'll get to it from current-generation systems.

-

Traditional programming is not going away. I sometimes hear the claim that most traditional programming will be replaced by machine learning systems. I am highly skeptical of this claim, partly as a corollary to the previous point, but more generally because machine learning isn't required for the vast majority of tasks. Machine learning excels when three conditions hold: the task involves the messiness of the real world, lots of data is available, and no reasonably fundamental model is available. If any of these does not hold, you generally don't have to settle for a machine learning model - the traditional alternatives are superior. A fun way to think about it is that humans are the most advanced natural general intelligence around, yet we invented computers to do certain specialized tasks we're too slow at. Why should we expect artificial intelligence to be any different?

-

Machine learning will augment most traditional tasks. That being said, any time one of these traditional systems needs to interact with a human, there is an opportunity for machine-learning-based augmentation. For example, a programmer looking at error messages could use a machine learning system that reads those messages and suggests a course of action. Computers are a lot more patient than humans. You can't make a human watch millions of hours of programming sessions to remember the common resolutions to problems, but you can make a machine learning system do exactly that. In addition, machine learning systems can learn from massive amounts of data very rapidly and on a global scale. If any instance of the machine learning system has ever encountered a particular set of circumstances, it can almost instantly share that knowledge globally, without being limited by the speed of human communication. I don't think we have yet begun to appreciate the impact of this, but we'll probably see it in another 5-10 years.

-

We'll get better at learning from small or noisy data. At the moment, machine learning systems mostly require large, relatively clean, curated data sets. There are various promising approaches to relaxing this requirement in one direction or the other (or to combining a small, well-curated data set to get started with a larger corpus of noisier data). I expect these to be perfected in the near future.

-

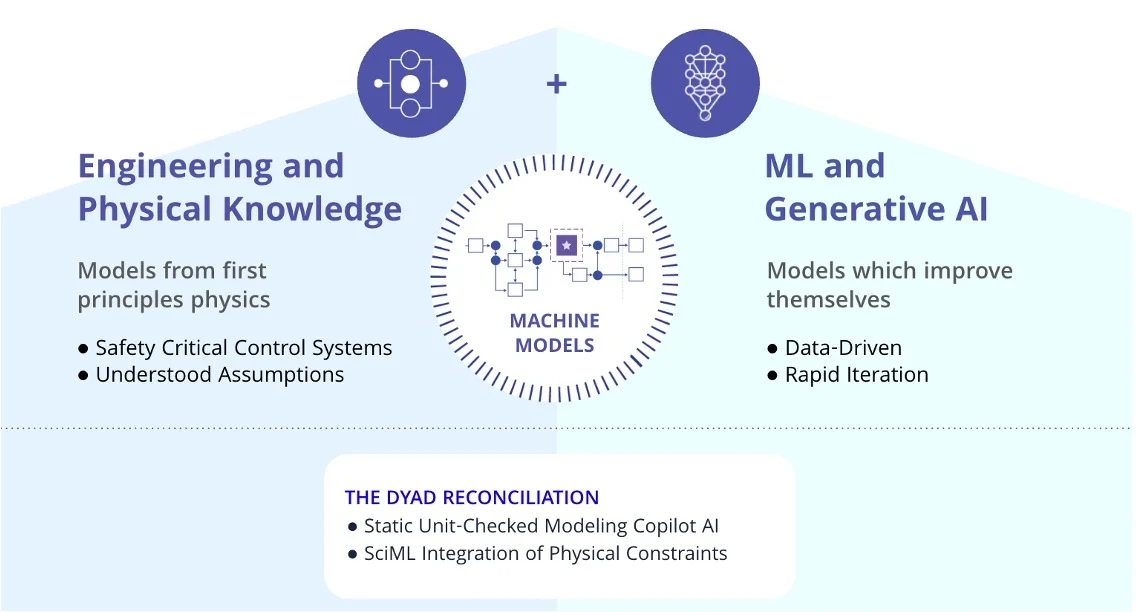

We'll see ML systems combined with traditional approaches. At the moment it seems fairly common to see ML systems that are made entirely of neural networks trying to solve problems end to end. This often works OK, because you can recover many traditional signal processing techniques from these building blocks (e.g. Fourier transforms, edge detection, segmentation, etc.), but the learned versions of these transforms can be significantly more computationally expensive than the underlying approach. I wouldn't be surprised to see these primitives making a bit of a comeback (as part of a neural network architecture). Similarly, I'm very excited about physics-based ML approaches where you combine a neural network with knowledge of an underlying physical model (e.g. the differential equations governing a certain process) in order to outperform both pure ML approaches and approaches relying solely on the physical process (i.e. simulations).
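
To make the point about recovering a Fourier transform from neural network building blocks concrete, here is a minimal sketch in Python with NumPy. It is an illustration, not something from the session: the helper name `dft_weights`, the signal length, and the use of complex-valued weights are all assumptions chosen for brevity. A bias-free linear layer whose weight matrix holds the DFT coefficients produces the same output as a fast Fourier transform, but it needs on the order of n² multiply-adds where the FFT needs on the order of n log n.

```python
import numpy as np


def dft_weights(n: int) -> np.ndarray:
    """Weights of a bias-free linear layer that computes the n-point DFT.

    Hypothetical helper for illustration: entry (k, m) is exp(-2*pi*i*k*m/n),
    which is the same sign convention np.fft.fft uses.
    """
    k = np.arange(n)
    return np.exp(-2j * np.pi * np.outer(k, k) / n)


rng = np.random.default_rng(0)
n = 64  # assumed signal length, chosen arbitrarily
x = rng.standard_normal(n)

W = dft_weights(n)

# "Learned-style" version: one dense matrix-vector product, O(n^2) work.
dense_out = W @ x

# Traditional primitive: fast Fourier transform, O(n log n) work.
fft_out = np.fft.fft(x)

# Both routes agree up to floating-point rounding error.
max_abs_diff = float(np.max(np.abs(dense_out - fft_out)))
print(f"largest difference between dense layer and FFT: {max_abs_diff:.2e}")
```

A network would have to discover these weights through training rather than being handed them, and a real-valued layer would need separate rows for the real and imaginary parts. Either way, the dense version stores and multiplies n² weights, which is the extra cost the paragraph above refers to when it compares learned transforms with the underlying primitive.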